What If Waterfall Was Right?

How the price of trying things shaped 60 years of software development

We love to mock Waterfall. It’s the meme of every agile talk, the “before” photo in every methodology slideshow. But here’s a question that’s been bugging me: what if Waterfall wasn’t a failure of imagination?

What if it was the only rational response to the economics of its time?

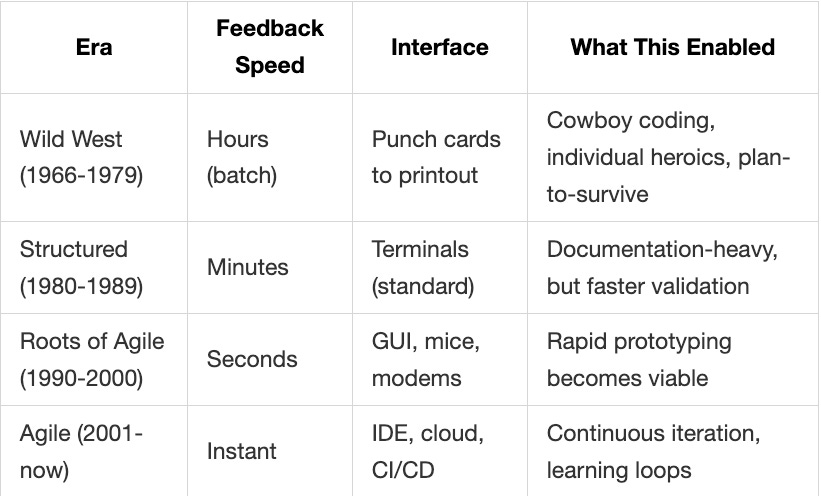

I’ve been digging into the history of software engineering, and I keep hitting the same pattern: economic constraints determined the practices we adopted, not some grand epiphany from a few enlightened people. When trying things was expensive, we planned more, and we tolerated uncertainty less. When things got cheap, we experimented more. And that explains more than you’d expect.

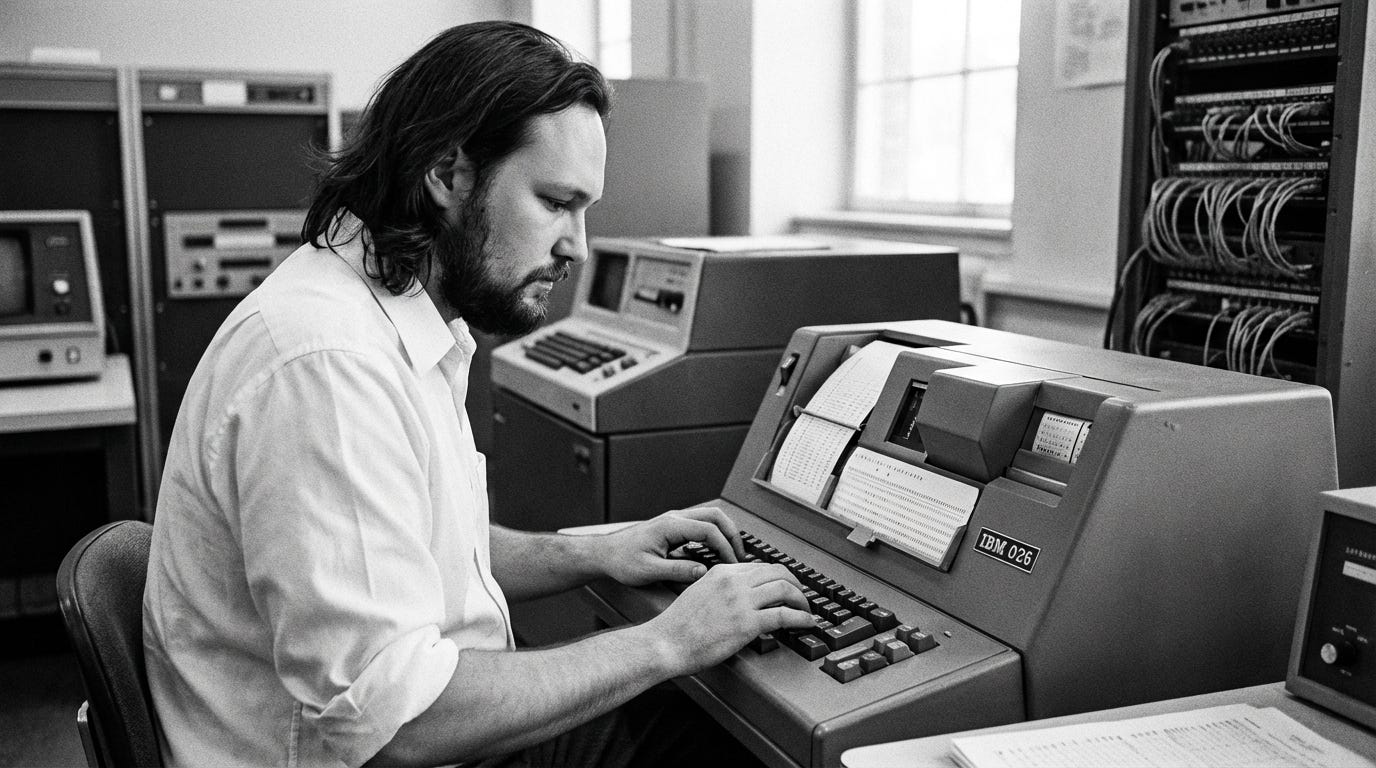

The Wild West (1966-1979)

At the beginning of time, there wasn’t really a thing called “software.” People were managing machines. The line between hardware and software was basically invisible, and nobody was thinking about development methodologies because... there weren’t any.

The setup was simple: you had people who wrote the programs, people who key-punched the solutions onto cards, and people who operated the computers. The work was split like that because machines were astronomically expensive compared to people. In that context, you’d be nuts not to optimize for machines rather than humans.

On the other hand, programmers were total cowboys. No formal processes, no standards, no documentation to speak of. You picked your own tools, your own language, your own schedule. If something broke, you fixed it and moved on. If you needed a way to store data, you wrote one from scratch. Every project was pioneering work (a nerd’s dream), and individual heroics were the norm.

In Wild West to Agile, Jim Highsmith put it well:

“Extremely costly computing performance led us to optimize machine performance over people performance.”

So yes, people planned. They triple-checked logic on paper before committing to a single card. But planning wasn’t what defined this era. What defined it was the chaos, the experimentation, the making-it-up-as-you-go. Planning was just a survival mechanism within that chaos, because when iteration costs you hours (if not days) and real money, you can’t afford to guess.

Terminals Made It Faster, but Not Fast Enough (1980-1989)

The 1980s brought green screen terminals, and suddenly, feedback dropped from hours to minutes. You could type a command and see a response. You could test an idea without waiting for the next batch run.

But “faster” didn’t mean “fast.” And the industry had just lived through a decade of cowboy chaos. Projects were failing. Budgets were exploding. The reaction was predictable: let’s control the heck out of everything!

This is when Waterfall took root. Each phase had to be fully completed and signed off on before the next one could begin. A real project under this model meant months of writing detailed requirement documents, user specifications, and design docs before anyone touched a keyboard. The idea was naively simple: if you nail the plan, the execution takes care of itself.

Fun fact: Waterfall’s sequential approach was not what its originator, Winston Royce, had in mind. In his famous paper from 1970, Royce was actually critical of the sequential waterfall approach and advocated for iteration. The term “waterfall” wasn’t even used until 1976. What happened in the 1980s was that this misunderstood sequential model became codified and mandated.

Companies mandated these processes top-down. “Design before coding” became the rule, and CASE tools (Computer-Aided Software Engineering) emerged to try to automate parts of the structured design process. The bureaucracy was real, but it made sense if you’d just watched projects burn from lack of structure.

In the Structured Era, Waterfall was the industry’s Xanax for complexity-induced anxiety, demanding certainty upfront. And honestly, given the economics, it made sense. Mistakes were still expensive enough that heavy planning remained the safer bet.

Some early rebellious ideas for iterative approaches did start appearing in this era, though. People could see the potential. But the cost of getting things wrong still pushed teams toward “plan everything first, ask questions never.”

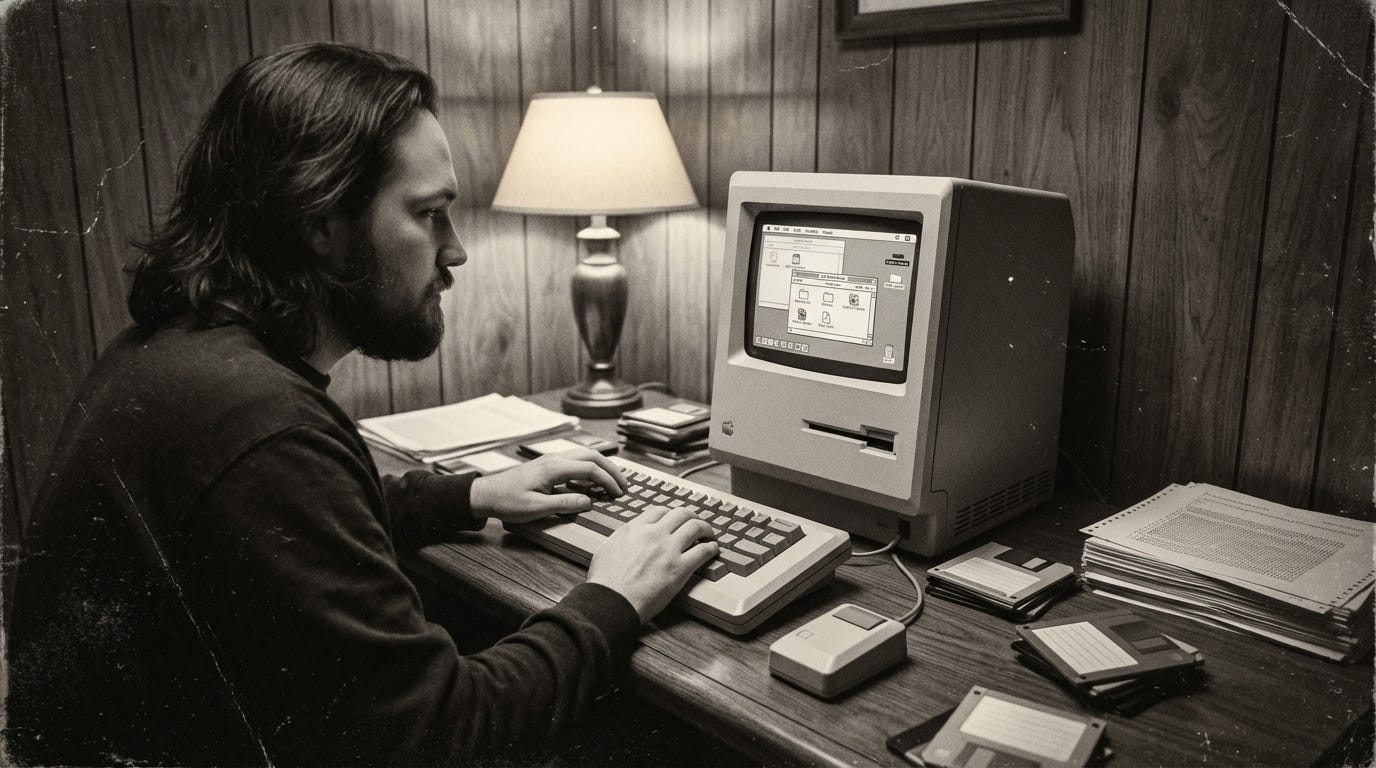

Then Things Got Cheap (1990-2000)

The 90s changed everything. GUIs, mice, modems, and the early web compressed feedback from minutes to seconds. Something clicked.

People were drowning in documentation. After a decade of the Structured Era, developers were fed up with writing 200-page requirement specs for features that were obsolete by the time they shipped. The frustration was real, and the economics finally gave them an alternative.

With seconds-fast feedback, you could finally build something small, see what happens, and adjust. Rapid prototyping became economically viable for the first time because the cost of trying something dropped far enough to make experimentation cheaper than extensive planning.
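To make that trade-off concrete, here’s a toy model. It’s my own simplification, not something from Highsmith or the historical record. It assumes every attempt succeeds with a fixed probability, that you keep trying until one works, and that upfront planning costs a fixed amount but improves the odds. The function names, probabilities, and cost units are all made up for illustration.

```python
def cost_try_first(attempt_cost: float, p_success: float) -> float:
    """Expected cost of retrying until one attempt succeeds.

    With a fixed success probability per attempt, the expected number
    of attempts is 1 / p_success (geometric distribution).
    """
    return attempt_cost / p_success


def cost_plan_first(plan_cost: float, attempt_cost: float,
                    p_success_planned: float) -> float:
    """Expected cost when you pay for planning first to improve the odds."""
    return plan_cost + attempt_cost / p_success_planned


def cheaper_strategy(attempt_cost: float, plan_cost: float,
                     p_raw: float = 0.2, p_planned: float = 0.8) -> str:
    """Return whichever strategy has the lower expected cost.

    p_raw and p_planned are assumed values, not measurements.
    """
    try_first = cost_try_first(attempt_cost, p_raw)
    plan_first = cost_plan_first(plan_cost, attempt_cost, p_planned)
    return "plan first" if plan_first < try_first else "try first"


# Arbitrary cost units: same planning cost, very different attempt costs.
print(cheaper_strategy(attempt_cost=1000, plan_cost=500))  # expensive attempts
print(cheaper_strategy(attempt_cost=1, plan_cost=500))     # cheap attempts
```

With these made-up numbers, the expensive-attempt case favors planning and the cheap-attempt case favors just trying. In this model, planning only pays off while `plan_cost` stays below `attempt_cost * (1/p_raw - 1/p_planned)`, so as attempts get cheaper, the room for planning shrinks with them.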

The practices that emerged reflected this shift. Teams started breaking projects into smaller, manageable chunks and delivering incrementally instead of in one big bang at the end. Customers got pulled into the process throughout, not just at kickoff and delivery (though old habits die hard). Teams began organizing themselves rather than waiting for mandates from above. The philosophical priority was flipping: make it work first, document it later.

The world had also gotten more uncertain. The internet connected everything. Requirements changed faster than you could document them. And the old playbook of “plan everything, then execute” started falling apart, because by the time you finished planning, the world had moved.

This era planted the seeds for everything that followed: test-driven development, refactoring as a discipline, continuous integration, and eventually DevOps. Most of these matured in the 2000s, but the 90s is where the thinking shifted. The question went from “how do we plan better?” to “how do we learn faster?”

When Iteration Becomes (Almost) Free (2001-Now)

In 2001, seventeen developers met at a ski resort in Utah and wrote the Agile Manifesto. It gets romanticized as a revolutionary moment, but really, it was formalizing what the economics had already made possible. The 90s showed that fast feedback enabled iterative work. The Manifesto gave it a name and a set of principles.

Then the infrastructure caught up. AWS launched EC2 in 2006, and suddenly, you didn’t need to buy servers to test an idea. Docker arrived in 2013 and made environments reproducible and disposable. CI/CD pipelines automated the build-test-deploy cycle. Feature flags let you ship code to production without exposing it to users. Each of these reduced the cost of trying things. Together, they made iteration almost free.
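If you haven’t worked with feature flags, here’s a minimal sketch of the idea. It assumes an in-memory flag table and a percentage rollout keyed on a user ID. The flag name `new_checkout`, the `FLAGS` dict, and the helper functions are hypothetical; real teams usually load flags from configuration or a dedicated flag service.

```python
import hashlib

# Hypothetical in-memory flag table, for illustration only.
FLAGS = {"new_checkout": {"enabled": True, "rollout_percent": 10}}


def bucket(user_id: str, flag: str) -> int:
    """Map a user deterministically to a bucket from 0 to 99 for a flag."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode()).hexdigest()
    return int(digest, 16) % 100


def is_enabled(flag: str, user_id: str, flags: dict = FLAGS) -> bool:
    """True if the flag is on and the user falls inside the rollout share."""
    cfg = flags.get(flag)
    if cfg is None or not cfg["enabled"]:
        return False
    return bucket(user_id, flag) < cfg["rollout_percent"]


def checkout(user_id: str) -> str:
    """Both code paths are deployed; the flag decides which one a user sees."""
    if is_enabled("new_checkout", user_id):
        return "new flow"
    return "old flow"


print(checkout("user-42"))
```

The new code path is already in production, but only the users whose bucket falls under `rollout_percent` ever reach it. Setting `enabled` to `False` turns it off for everyone without a redeploy, which is what makes trying it so cheap.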

The practices reflected this. Test-driven development makes no sense when running a single test takes hours, but it becomes obvious when your suite runs in seconds. Continuous integration was impossible without automated builds and shared repositories, and trivial once both became standard. By 2011, Amazon was reportedly deploying to production every 11.6 seconds. That kind of continuous deployment would have been pure madness in any previous era.

But the problems themselves also got harder. Systems became distributed, user expectations shifted constantly, and markets moved faster than any planning cycle could keep up with. Software stopped being a complicated engineering problem, one you could analyze and predict your way through, and became a genuinely complex one, where outcomes are emergent and cause-and-effect only makes sense in hindsight.

Dave Snowden’s Cynefin framework captures this well. In complicated systems, you can analyze and plan your way to a solution. In complex systems, you can’t. The only way forward is to probe, sense, and respond: try something small, observe what happens, and adapt. That’s not just agile philosophy. It’s a survival strategy for a world where you genuinely cannot predict what’s going to work until you try it.

When iteration is almost free, probe-sense-respond stops being a nice idea and becomes the cheapest, fastest way to learn.

The AI Era: What Happens When Iteration Costs Collapse Again? (The Present)

We’re living through another cost collapse right now.

Tools like Lovable, V0, and Replit let you go from idea to working prototype in minutes. AI-assisted coding tools like Cursor let you explore implementations, refactor architectures, and generate throwaway code at a speed that would have felt absurd even two years ago. I wrote about my own experience redesigning a feature with Cursor and Claude, and the thing that struck me most wasn’t the quality of the output but how cheap it was to just try things.

The cost of “I wonder if this would work” is collapsing, and with it, the entire calculus of when to plan versus when to experiment.

Remember probe, sense, and respond from the Cynefin framework? AI makes the “probe” step dramatically cheaper. You can generate five prototypes in the time it used to take to spec one. You can explore three different architectures before committing, instead of debating which one to bet on in a meeting room. When probing costs almost nothing, you probe more, and you learn faster.

If the pattern holds (and I think it does), new practices will emerge that are currently unthinkable. Just like TDD was unthinkable in the punch card era, and continuous deployment was unthinkable before the cloud. I don’t know exactly what those practices will look like. Nobody in 1970 predicted pair programming or infrastructure-as-code. The practices emerge from the economics, not from thought leadership.

But the direction is clear: we’re entering an era where experimentation becomes so cheap that not trying things will be more expensive than trying them. The teams that internalize this first will define what comes next.

What This Actually Means for You

There’s a practical lesson buried in this history, and it goes beyond “agile good, waterfall bad.”

Stop blaming culture for methodology problems. If your team plans too much and iterates too little, the first question to ask isn’t “do we have the right mindset?” but “what’s making iteration expensive for us?” Maybe it’s a slow CI pipeline. Maybe it’s a painful deployment process. Maybe it’s a review process that takes three days. Fix the economics, and the methodology will follow. Listen to the “Why Deleting Your Backlog Makes You Ship Faster (CEO Explains)” episode on the Product Engineers podcast to learn about the concept of “Iteration Factory”.

Examine your own iteration costs. Where are your feedback loops longest? That’s where you’re economically forced into planning-heavy mode, whether you want to be or not. The answer isn’t about willpower but about making iteration cheaper in that specific area.
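A rough way to start is to write down how long each loop takes and sort the list. The sketch below does just that. The durations are placeholders I invented (only the three-day review echoes the example above), so swap in your own measurements.

```python
from datetime import timedelta

# Placeholder durations, not real measurements. Replace with your own.
feedback_loops = {
    "unit tests (local)": timedelta(seconds=40),
    "CI pipeline": timedelta(minutes=25),
    "deploy to production": timedelta(hours=2),
    "code review": timedelta(days=3),
}


def rank_loops(loops: dict) -> list:
    """Sort feedback loops from slowest to fastest."""
    return sorted(loops.items(), key=lambda item: item[1], reverse=True)


def share_of_total(loops: dict) -> dict:
    """Fraction of the total wait time that each loop accounts for."""
    total = sum(loops.values(), timedelta())
    return {name: duration / total for name, duration in loops.items()}


shares = share_of_total(feedback_loops)
for name, duration in rank_loops(feedback_loops):
    print(f"{name:<22} {str(duration):<16} {shares[name]:.1%}")
```

Whatever lands at the top of that list is where iteration is most expensive for you, and probably where planning-heavy habits are hardest to shake.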

Don’t cargo-cult practices from different economic contexts. A startup with a 5-minute deploy pipeline and a bank with a quarterly release cycle face fundamentally different iteration economics. The “right” methodology depends on what you can afford to try.

Ask the most powerful question: what could you do if your iteration cost dropped another 10x? That’s not a hypothetical, because AI is making it real. And if you don’t have an answer yet, that’s the first problem to solve.

The Real Lesson

The history of software engineering isn’t a story of smart people gradually discovering the “right” way to build things, but rather one of economic forces making certain approaches viable and others wasteful.

Waterfall wasn’t wrong; it was rational for its era. Agile isn’t inherently right; it’s simply rational for ours. Whatever comes next won’t be right either. It’ll be whatever the economics of near-zero iteration costs make possible.

So the next time someone mocks Waterfall in a conference talk, remember: they’re mocking people who made the most rational choice available to them. We’re all doing the same thing. The only difference is the price of trying.